One of my favorite stories about time and data is the 18th-century quest to solve the longitude problem.

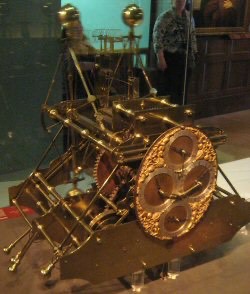

Hundreds of years before measuring cloud metrics became the infrastructure problem of our time, the best scientists and engineers in the world were trying to figure out how to determine longitude at sea. Latitude (north/south) was relatively easy, but longitude (east/west) was nearly impossible. On land, an engineer could use a clock to record when the sun reached “noon,” its highest point in the sky, and then calculate the time difference from “noon” at an origin city. The earth takes 24 hours to rotate 360 degrees, so each hour of difference corresponds to 1/24th of a rotation, or 15 degrees of longitude. In the early 1700s, clocks needed pendulums to keep time, and on the rolling ocean they were useless. Ship captains could not measure longitude, which resulted in lost lives, lost ships, longer routes, and incredible costs. The expense was so great that the British government (and other nations) offered the equivalent of millions of dollars for the Longitude Prize, sort of an X Prize of its day, to anyone who could solve this riddle. It was one of the most difficult international engineering problems of its day until John Harrison answered the challenge with his chronometer, first designed in 1730, and changed navigation forever.
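
To make the clock arithmetic concrete, here is a minimal sketch in Python. The function name and the sign convention (positive means west of the origin city) are my own assumptions for illustration; the only fact taken from the story is that 24 hours corresponds to 360 degrees.

```python
# Degrees of longitude the earth turns through in one hour: 360 / 24 = 15.
DEGREES_PER_HOUR = 360 / 24


def longitude_west_degrees(origin_clock_at_local_noon: float) -> float:
    """Estimate longitude relative to the origin city.

    origin_clock_at_local_noon is the reading, in hours on a 24-hour scale,
    of a clock still set to origin-city time at the moment the sun is at its
    highest point where you are standing.

    Assumed convention: a positive result is degrees west of the origin,
    a negative result is degrees east.
    """
    hours_after_origin_noon = origin_clock_at_local_noon - 12.0
    return hours_after_origin_noon * DEGREES_PER_HOUR


# Local noon arrives when the origin clock reads 14:00, two hours "late",
# so the observer is 2 * 15 degrees west of the origin.
print(longitude_west_degrees(14.0))
```

The calculation is trivial; the hard part, and the reason for the prize, was keeping the origin-city clock accurate on a moving ship.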

Almost three hundred years later, measuring the time series data of your business, applications, and infrastructure is far from a solved problem. Today’s commercial and open source technologies provide a basic level of metrics, but we are entering the hyperscale era of cloud. Enterprises are building cloud environments and applications that generate billions of metrics and require petabytes of storage and detailed time granularity. In sum, they need better resolution of data to get the deep insights they are looking for. We are entering the age of high dimensionality (HD) metrics. Deep insights require the ability to query metrics at a granular level when needed, and also at a high level to understand the systems and the business. Metric data today is defined by high dimensionality or high cardinality, meaning each metric can be broken down into several subcomponents or metrics. For example, an event like a user click or purchase will be associated with a timestamp, a geolocation, a device type, an OS version, et cetera. Querying and storing one dimension is simple, but querying and storing all the metrics with all their dimensions quickly becomes cost prohibitive, slow, and impossible to use.
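
A rough way to see why dimensions get expensive is to count the distinct time series a single metric can fan out into. The sketch below is illustrative only: the dimension names echo the click example above, and the per-dimension counts are hypothetical numbers I picked, not measurements from any real system.

```python
from math import prod

# Hypothetical number of distinct values per dimension for one "click" metric.
click_dimensions = {
    "geo_location": 200,
    "device_type": 50,
    "os_version": 30,
}


def max_series_count(dimensions: dict[str, int]) -> int:
    """Upper bound on distinct time series for one metric.

    Every combination of dimension values can become its own series,
    so the bound is the product of the per-dimension counts.
    """
    return prod(dimensions.values())


# One dimension alone stays small; all three together multiply.
print(max_series_count({"geo_location": 200}))
print(max_series_count(click_dimensions))
```

Adding one more dimension multiplies the total rather than adding to it, which is why storing and querying everything at full detail gets out of hand so quickly.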

Rob Skillington and Martin Mao conquered this problem at Uber. During their time at Uber, the company’s monitoring grew to tens of billions of time series metrics, along with the need to query billions of metrics per second. Its cloud infrastructure went from hundreds of servers to thousands of microservices as the ride-sharing company exploded to over fifteen million rides a day. Martin and Rob evaluated and tried the existing commercial and open source offerings, but quickly realized that at Uber’s rate of growth, these solutions would become unusable. Four years ago, Martin, Rob, and their team at Uber started building M3, Uber’s metrics and monitoring solution built on M3DB, a purpose-built metrics database. Soon Uber was running all of its operational metrics on M3. M3’s HD metrics gave Uber fast and detailed resolution, the equivalent of 4K resolution where, in comparison, all other technologies looked like a VGA display. HD metrics are valuable not only for Uber but also for any enterprise running at scale in the cloud, and the community forming around the open sourced M3 project shows the unmet demand in the market.

I first met Martin and Rob when they were thinking about starting a company around M3. From our first meeting, I knew I wanted to help them build it. Meeting over a period of time, and in four different cities, Rob, Martin, and I discussed what a company around M3 could look like, and today they are announcing the private beta launch of Chronosphere.

I am fortunate enough to have led Greylock’s Series A investment and joined the board of Chronosphere. Investors dream of finding the intersection of large markets, great founders, and deep technology, and Chronosphere has all three. This combination is rare magic, but to watch Rob and Martin work together is also magic. The two first met at Microsoft in 2011 after moving from Australia and have worked closely together since. To watch them debate technical details of a database, or discuss the strategic direction of the observability market, is to watch one of the great teams in technology, and I wanted to be part of it.

Today, Chronosphere is bringing its first product, a hosted cloud service, to market. Chronosphere supports the most demanding enterprises that need deep insights from their metrics at global scale and reliability, at a fraction of the cost of equivalent technologies.

In the 1700s, after the release of John Harrison’s sea watch that solved the longitude problem, it became standard to navigate the seas with a chronometer. Similarly, I believe it will become standard to navigate your cloud with Chronosphere.