Over the past few months, we’ve spoken to many CISOs and CIOs of F100 and mid-late stage startups about their top IT and security priorities. Unsurprisingly, AI and foundation models are top of mind for almost all of the executives we’ve spoken with.

The near-unanimous focus on AI is driven by the promise that foundation models could unlock a massive amount of value for enterprises going forward. Similar to the cloud platform shift kicked off by AWS with the launch of S3 and EC2, this transition represents a sea change in software development. Software will now be architected with a consideration for AI properties at the core.

As Mitchell Hashimoto from Hashicorp writes, “The hallmark trait of a platform shift is forcing an evolution in software properties… Traditional software can run in cloud environments, but it is inferior to software with equivalent functionality that embraces the dynamic, cloud-native approach.” AI-native software will display similar advantaged properties in the long run. For more context, you can read the recent essay by my partner Jerry Chen in which he discusses how foundation models “are the crucial element to what we called Systems of Intelligence six years ago.”

Recognizing this promise, enterprises are working to define their production roadmap for LLMs. The first step involves defining target use cases and prototyping capabilities across their business, often utilizing OpenAI or other large model providers. As they move towards production, challenges around data management, inference cost/latency, scalability, and security arise. Security is rapidly becoming a top priority, as AI represents a new and currently unsecured surface area (much as cloud did in a previous platform shift).

Compounding this new risk surface is that building these AI-native applications also requires an uncomfortable mindset shift in software development. Results in AI are often no longer deterministic, but stochastic – the same input may not receive the same output from AI models every time, meaning applications can behave in unexpected ways. Security controls over AI may themselves also depend on LLMs, adding another non-deterministic circular dependency. This shift towards unpredictability will further increase the scrutiny on models in the minds of security and risk professionals.

This is a clear opportunity for startups. At Greylock, we are actively looking for founders who are working at the intersection of security and AI to meet the needs of today’s CISOs and CIOs. I’ve outlined several use cases below.

Cloud Security As A Model

Following the cloud platform shift, we can look at the history of securing the shift to cloud as a possible parallel to forecast how AI security will develop. As in cloud security, it may take some time for the right durable architecture to emerge. The infrastructure layers of AI need to stabilize, preferred modes of AI consumption will become clearer, and user workflows will become more homogenous. Many of the largest and fastest-growing cloud security companies were started relatively recently… and years after the migration to cloud began! We encourage founders to think deeply about the right starting point and have the adaptability to shift with the market.

Current Priorities – Visibility, Governance, and Auditability

The immediate priority CISOs face is visibility, governance, and auditability to mitigate risk. Security and risk leaders want to understand the totality of foundation model-based solutions being used across the company – and who has access to them.

Prompt and response audit logs provide visibility into both inputs to and outputs from LLMs. Employees could input PII or other sensitive data into these systems that could later leak into responses after the model is fine-tuned on user inputs. Code that engineers take from the responses of code generation tools could include vulnerabilities or present IP or OSS license risk without attribution. A slightly more complex use case involves inheritance of permissions in information retrieval – as internal data is integrated and returned to model queries via in-context learning or fine-tuning, enterprises want to inherit existing controls on who can view which data.

In response, we’ve seen a wave of talented founders from both security and AI backgrounds launch companies offering a proxy/reverse proxy architecture to cover these CASB for AI and DLP use cases. This approach mediates access to LLM applications and monitors inputs and outputs from the systems. These products offer clear initial value, and provide one potential wedge towards unlocking the larger platform opportunity in securing AI.

Potential AI Security Use Cases

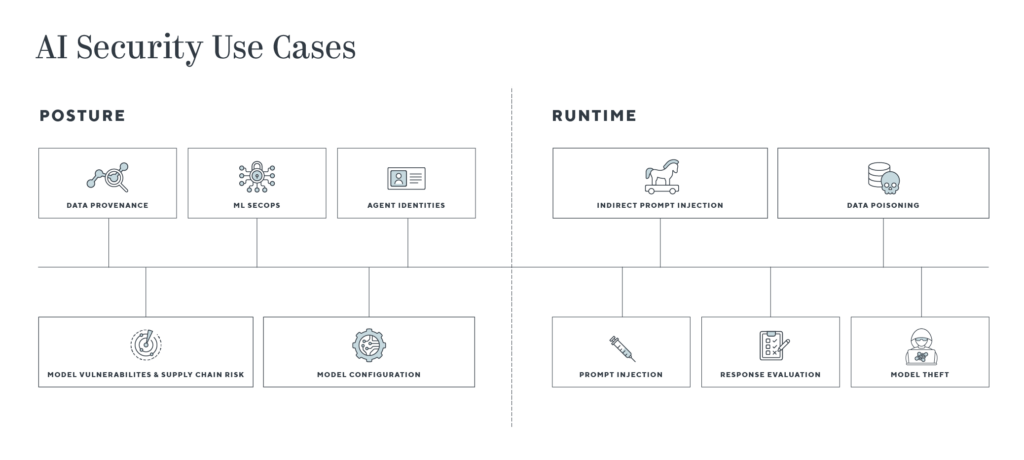

The diagram below represents potential use cases that companies may need to address on both the posture and detection and response sides. Details on the use cases are outlined below the graphic.

AI Posture Use Cases

In cloud security, demand for fixing misconfigurations in posture (CSPM) and vulnerability management has exploded in the last few years. Some parallels on the AI security side include:

- Data provenance and policies: Organizations need to ensure they (or their AI providers) are following regulatory controls on data storage, retention, and usage policies across geographies. At the most basic level, they need to be able to know where the data came from, and if it is sensitive or biased. Additionally, organizations must know if the data presents a legal risk from copyright or open-source license agreements.

- AI agent identities: As AI agents enter the enterprise, interact with systems, and are potentially granted permissions to modify state, they become a new identity surface area to govern. Organizations must know the extent of the access and functions these ephemeral agents have. RPA security has been discussed in the past, but AI agents could be less predictable and more powerful.

- Model registries/toolchain vulnerabilities and supply chain risk: Tools are needed to find vulnerabilities in the code of OSS models and toolchains (for example, this PyTorch vulnerability). 3rd-party dependencies in upstream services present another risk factor, e.g., this bug in Redis cache that affected ChatGPT. Safetensors provide an alternative to using pickle files for model weights that can execute arbitrary code when unloaded.

- MLSecOps and model testing: Instrumenting AI development pipelines with code would allow developers to both look for vulnerabilities as well as create a model BOM for tracing and auditability. Stress tests can also be integrated into this process to evaluate the robustness of models to different data types and common attacks pre-production.

- Model configuration: There is the potential for risks to emerge in settings and configurations as models become more productized, akin to the misconfigurations of cloud services.

While the provided use cases represent a few potential similarities to cloud posture, we note that others may emerge. In addition, this area is particularly dependent on how the market structure for LLMs evolves. Will they be consumed primarily as a highly consolidated set of 3rd-party APIs (OpenAI, Bard, Cohere)? Or as a broader distribution of 3rd-party vendors, open-source, and in-house models? Even if the market structure coalesces to the former, fine-tuning and memory modules present at least some level of customization on top of models that would need to be protected.

AI Runtime and Detection and Response

Within cloud security, we are seeing a resurgent interest in runtime protection and detection and response capabilities over the past year. Once posture is at appropriate levels, ongoing real-time visibility is the next level in enhancing environment security. While attacks on AI models so far seem more theoretical, that is likely to change as they grow in widespread adoption (and, again, the caveat from above holds – we expect new use cases and novel vulnerabilities in LLMs to emerge).

Some of the runtime and D&R capabilities needed for AI security include:

- Prompt injection: A malicious prompt can be used to attempt to jailbreak the system, provide unwarranted access, or steal sensitive data. Recent research has investigated systematically generating adversarial prompts via greedy and gradient search techniques.

- Indirect prompt injection attacks: Even if the prompt itself is well-constructed and not inherently malicious, systems with agent capabilities, plugins, and multiple components present additional risk. For example, the prompt could call out to a webpage with comments hidden in it designed to replace the original user prompt or inject a payload that creates a compromised connection with a malicious third party. Plugin chaining can also lead to permission escalation and compromise. Kai Greshake has enumerated a number of possibilities here.

- Some systems have treated these systems as arbitrary code execution engines, which is extremely risky – see this issue with Langchain.

- Response evaluation: A lack of evaluative controls for incorrect or toxic responses presents risk and potential reputational damage to companies. PII and copyright regurgitation are another legal risk here. Again, underlying the challenge of evaluation is a shift from deterministic outputs in software to stochastic ones.

- Data poisoning: Controls should be put in place against attackers inputting malicious, low quality, or unsupported data in order to exploit the model or degrade its performance.

- Model theft: Safeguards are needed to prevent attackers from extracting model weights or reproducing the decision boundary or behavior of a proprietary model.

If you’re building in this space or are a security professional exploring securing AI, please reach out to me at jrisch@greylock.com. We have not yet invested in the market, but are excited to speak with teams targeting the platform opportunity and examining where durable product value and depth can be built. There is no such thing as too early – we love brainstorming on market and product direction.

Greylock has a distinguished, long-term track record of Day One investments in both AI and cybersecurity. Greylock partners have invested in, helped build, and/or worked at many of the most important companies in cyber including Palo Alto Networks, Okta, Rubrik, Sumo Logic, Cato Networks, Abnormal AI, Skyhigh Networks, Demisto, Imperva, Check Point and Sourcefire.

Thanks to Gram Ludlow, Sherwin Wu, Jerry Liu, Simon Suo, Ankit Mathur, Asheem Chandna, and Malika Aubakirova for their thought partnership in this space.