For the past few decades, user research has inherently come with a tradeoff: scale or quality.

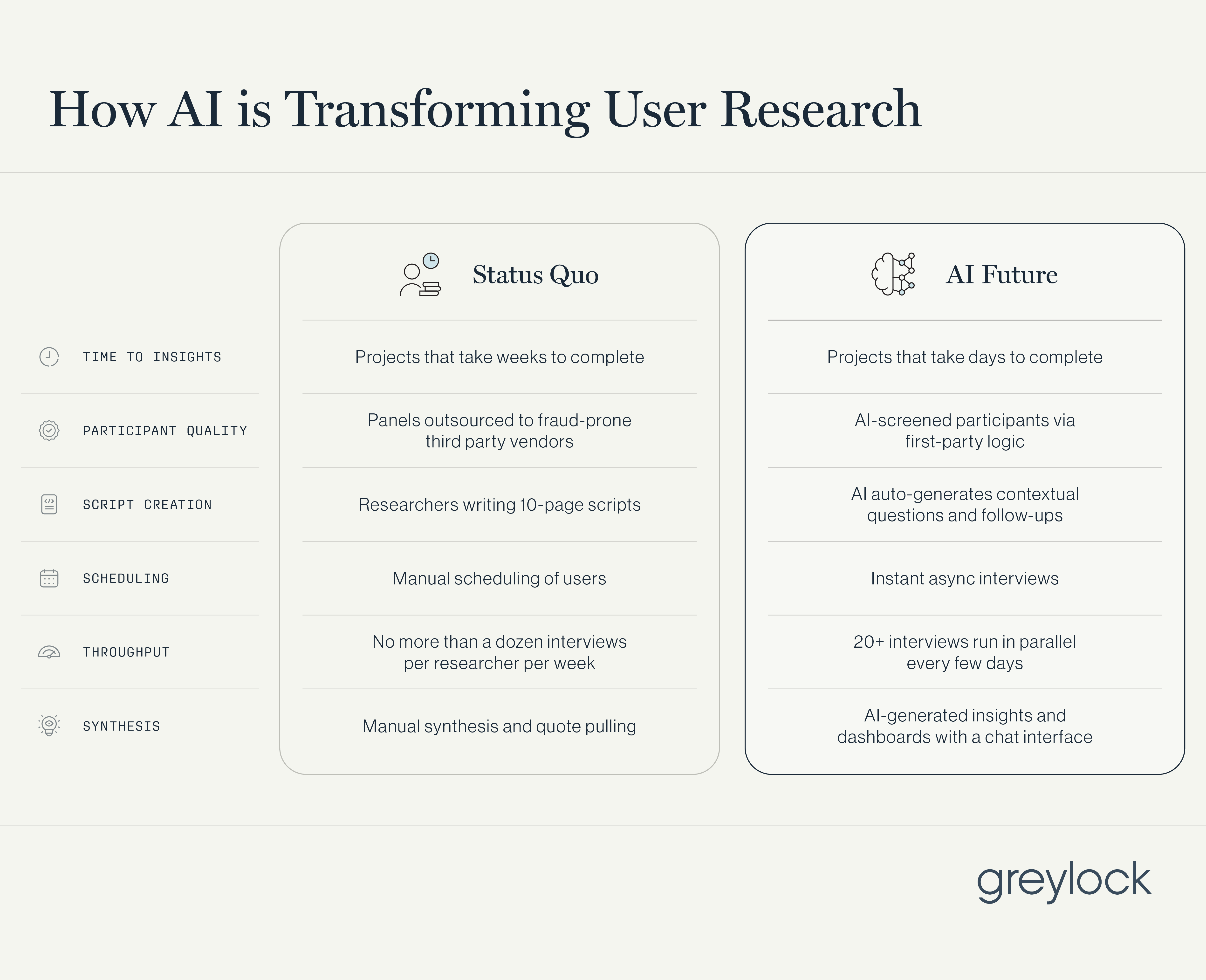

Teams could send unmoderated mass surveys (low fidelity, low effort) or run a handful of moderated interviews (high fidelity, high effort). The difference in turnaround time for interview-based research projects is stark: writing and battle-testing questions can take up to a week, finding and scheduling participants can take multiple weeks, and conducting the full set of interviews can take a month or more.

AI is breaking that tradeoff. Advances in voice and reasoning models make it possible to conduct high-quality, qualitative interviews with the speed and scale of surveys. The implications are significant: research is no longer bottlenecked by calendars, bandwidth, or headcount. Interviews become a primitive for building products—on-demand, async, and intelligent.

There’s a clear opportunity for startups. We at Greylock are actively looking for founders who are building AI-native user research products.

In this post, we outline why we believe now is a great time to be building AI-native solutions in user research, provide the current state of the market with incumbents and startups, look at how buyers are thinking about this category based on dozens of conversations, and share what we believe the winning AI user research platform will look like.

Why Now

- User research is increasingly a shared responsibility across orgs.

In modern orgs, research is conducted across product, design, growth, customer experience, and marketing functions, not just by dedicated user research teams. As insight becomes a shared responsibility, AI-native tools make it possible for anyone to run high-quality interviews without the scheduling overhead.

- AI models can reason and talk, not just chat via text.

With advances in reasoning, contextual understanding, and audio interfaces, AI interviewers can probe, clarify, and ask follow-up questions like a real researcher would. This unlocks deeper qualitative insight and faster execution. In fact, we’ve found that participants generally share more when speaking with an AI than with a human.

- AI enables teams to interact with insights and not just receive static deliverables.

User research is evolving from one-off reports into reusable, queryable assets. AI-native tools turn transcripts into interactive interfaces where teams can search for specific themes, extract quotes, and generate executive summaries and reports. They also allow users to retrieve targeted slices of insight, like clips of specific instances of user behavior, which makes it possible to identify product frictions or gaps without digging through hours of footage or full transcripts.

The Market Opportunity

The market opportunity is well-validated by incumbent success. Broadly, the legacy user research market spans two categories: software platforms and research service networks. Across both, multiple players have built multi-billion dollar outcomes on top of traditional, manual workflows.

- Software Platforms

These tools are built to scale surveys and research studies:

- Qualtrics was acquired by SAP for $8B [1] in 2018 and later spun out at a $27B+ IPO valuation in 2021 [2].

- Medallia went public in 2019 at a $2.5B market cap [3] and was then taken private by Thoma Bravo for $6.4B in 2021 [4].

- SurveyMonkey (Momentive) IPO’ed at a market cap of $1.46B in 2018 [5].

- UserTesting IPO’ed at a market cap a little under $2B in 2021 [6] and was acquired by Thoma Bravo in a $1.3B take-private in 2022 [7].

- Research Networks / Services

These are large-scale agencies and panel providers that facilitate market research:

- Nielsen and Kantar have long dominated syndicated data, consumer panels, and brand tracking, servicing enterprises through deeply manual and service-heavy models.

- Other networks like Ipsos, GfK, and Dynata fill similar roles with a mix of owned panels, survey execution, and outsourced analytics.

These platforms served a market that tolerated slow, expensive, manual workflows. AI-native research unlocks dramatically faster time-to-insight, broader usage across functions, and lower marginal cost per interview. If the legacy approach produced $25B+ in enterprise value, the AI-native one could go even further.

And, most importantly, this is a greenfield category. Most buyers we talked to can’t name more than one AI-native user research vendor. RFPs are rare.

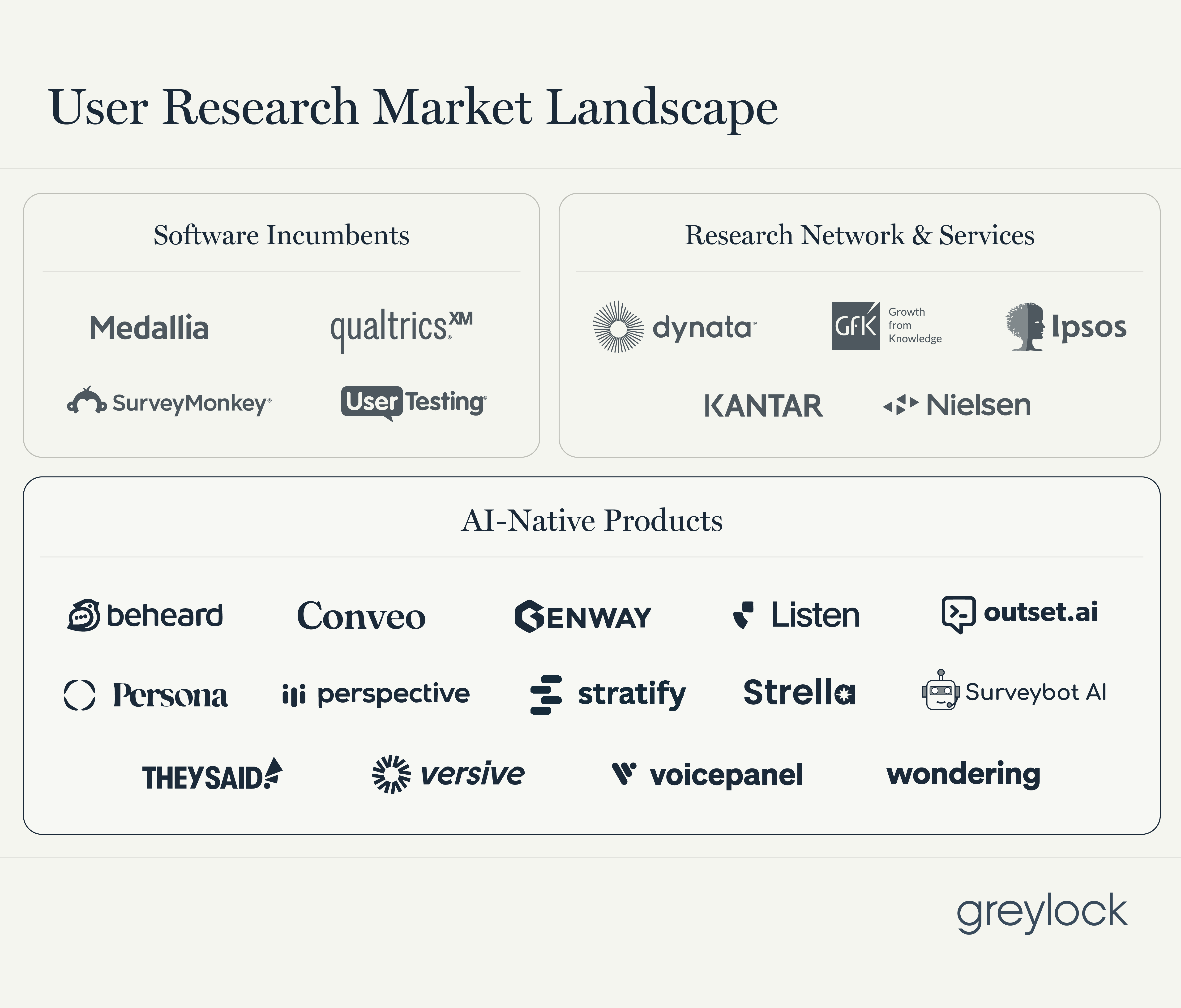

Here is a map of some of the incumbent and AI-native user research players in the market:

Buying Behavior

In dozens of conversations with buyers on product, design, growth, customer experience, and marketing teams at B2B and B2C organizations, we’ve seen two distinct purchasing behaviors for AI user research platforms emerge:

- Labor substitution

Smaller or resource-constrained teams view AI-led interviews as a replacement for additional headcount. Instead of hiring another researcher, a product manager or designer can run dozens of interviews autonomously. For instance, a team conducting around a dozen interviews per week can scale up to 20+ using an AI interviewer.

- Research stack augmentation

Larger organizations integrate AI-native tools into their existing research workflows. These teams already conduct unmoderated surveys and traditional interviews, but use AI to accelerate research in new markets across various foreign languages, spin up projects faster, and increase overall velocity. Buyers we talked to are using AI tools to broaden research coverage without expanding team size.

In both cases, AI is not just replacing workflows; it’s expanding the scope and frequency of research. What was previously quarterly is becoming weekly. This drives net-new usage across multiple teams, not just replacement spend.

User Research Reimagined with AI

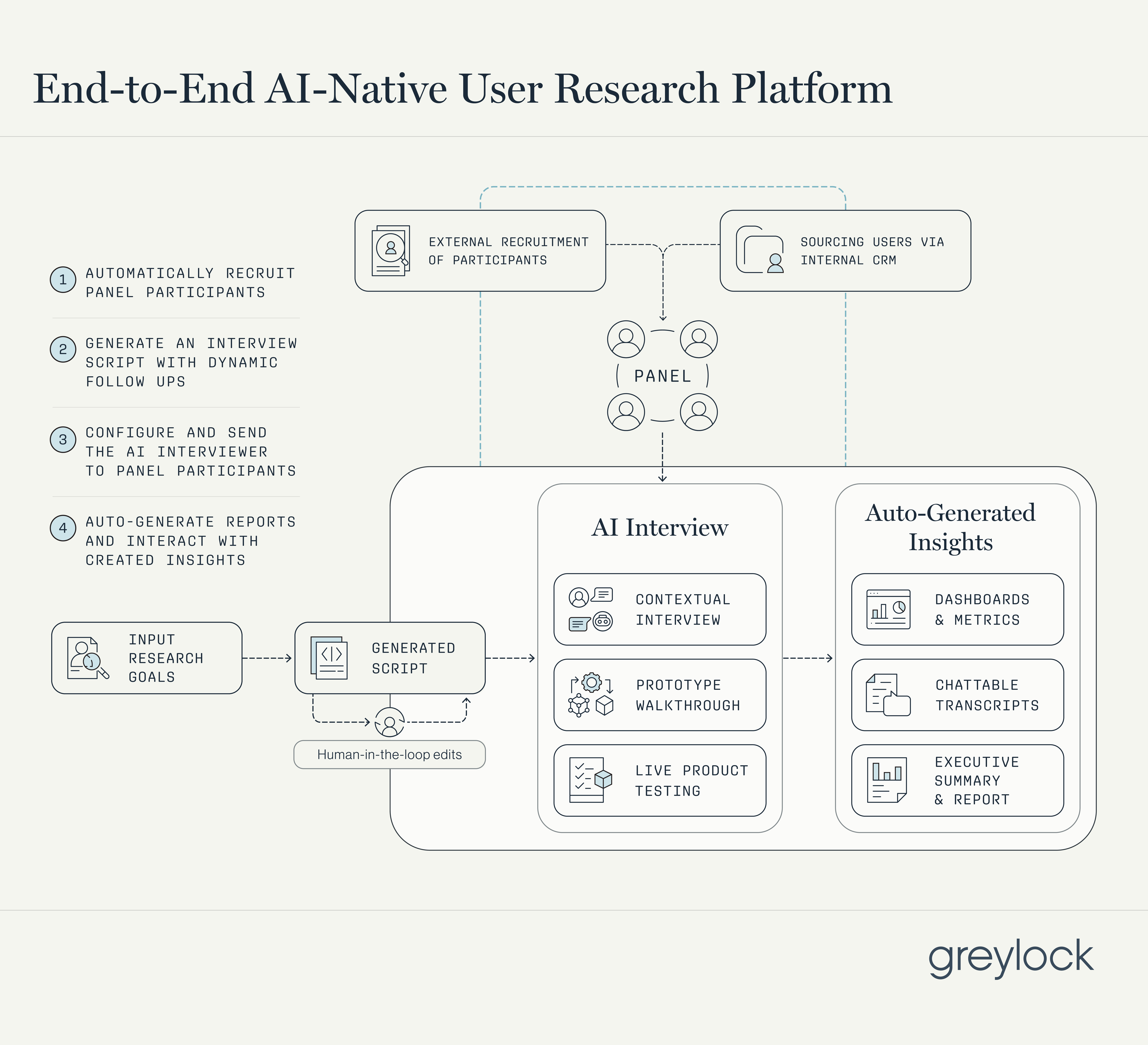

The next dominant platform in user research won’t look like a better survey tool or a scheduling assistant for interviews. It will be a system that fully reimagines how research is done end-to-end.

- Turns participant recruitment into a software primitive

Historically, sourcing and qualifying participants has been one of the most manual and error-prone parts of user research. Teams rely on spreadsheets, third-party recruiters, or external panels, many of which are expensive, slow, and prone to fraud. The next dominant research platform should treat participant recruitment as a programmable layer of the stack: fast, repeatable, and API-driven. The winning platform should:

- Integrate directly with internal systems to pull from CRMs, product analytics tools, or customer support platforms. This would allow teams to instantly target real users based on behavioral data, without wrangling exports or working through a research coordinator. In some cases, the platform should be able to automatically send an AI interview to a user who takes a qualifying action.

- Recruit quality participants externally using AI screeners, as seen with companies like Mercor and other human data staffing startups. These systems apply filters, pre-interview checks, and real-time AI screeners—ranging from traditional surveys to conversational text flows or spoken audio prompts. The model should assess eligibility, disqualify low-quality respondents, and route participants to the right flows automatically, ensuring high-quality input at scale.

- Intelligently manage outreach and targeting rules, including automatic deduplication to avoid sending interviews to the same users repeatedly, dynamic incentive structures based on panel availability, and the ability to test different outreach or copy. This ensures participant freshness, increases response diversity, and reduces fatigue or over-sampling bias.
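To make the deduplication rule above concrete, here is a minimal sketch of how a platform might filter a batch of candidates before sending invites. Everything in it is an assumption for illustration: the `select_invitees` function, the 90-day cooldown, and the shape of the invite history are hypothetical, not a description of any vendor's system.

```python
from datetime import datetime, timedelta

# Hypothetical cooldown window: do not re-invite a user more often than this.
COOLDOWN = timedelta(days=90)


def select_invitees(candidates, last_invited, now, cooldown=COOLDOWN):
    """Return the user IDs that are eligible for a new interview invite.

    candidates:   iterable of user IDs, possibly containing duplicates
    last_invited: dict mapping user ID -> datetime of the most recent invite
    now:          current time, passed in so the rule is easy to test
    """
    seen = set()
    eligible = []
    for user_id in candidates:
        if user_id in seen:
            continue  # drop duplicates within this batch
        seen.add(user_id)
        previous = last_invited.get(user_id)
        if previous is not None and now - previous < cooldown:
            continue  # invited too recently; skip to limit fatigue
        eligible.append(user_id)
    return eligible


now = datetime(2025, 6, 1)
history = {"u1": datetime(2025, 5, 1), "u2": datetime(2025, 1, 1)}
print(select_invitees(["u1", "u2", "u3", "u2"], history, now))
```

In this example `u1` is skipped because the last invite was about a month ago, while `u2` and `u3` pass. A real system would likely layer incentive rules and outreach experiments on top of a filter like this.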

- Supports dynamic scripting and multiple interview modes

One of the most powerful unlocks in this new paradigm is how the AI handles scripting. Before the interview is conducted, the product should generate a base question flow and then decide in real time when to ask a follow-up, what that follow-up should be, and how far to diverge from the script based on the user’s response. This would enable teams to customize the level of structure: either opt for loosely scripted conversations with open-ended, dynamic exploration or enforce tighter scripts with defined prompts and limited branching. Either way, the AI would transform interviews from a static list of questions into an adaptive conversation engine while keeping the conversations consistent enough to compare reactions accurately across users. The user research platform should be able to support at least the following three categories of interviews:

- Contextual interviews: These would be traditional “talk to a user” sessions, but conducted asynchronously via text or voice with a dynamic AI interviewer that decides when and what follow-up questions to ask.

- Prototype walkthroughs: Users should be able to interact with embedded Figma designs while the model asks follow-up questions, observes behavior, and adjusts dynamically.

- Live product testing: Users should be able to share their screen and complete real flows while the model watches, asks questions, and records reactions in real-time.
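The "level of structure" control described above can be pictured as a per-question follow-up budget. The sketch below is only an illustration under stated assumptions: `ScriptedQuestion`, `needs_followup`, and `next_action` are hypothetical names, and the word-count check is a stand-in for whatever judgment a reasoning model would actually make about an answer.

```python
from dataclasses import dataclass


@dataclass
class ScriptedQuestion:
    text: str
    max_followups: int  # 0 enforces a tight script; higher values allow exploration


def needs_followup(answer: str) -> bool:
    """Placeholder heuristic: treat very short answers as worth probing.

    In a real product this decision would come from a model, not a word count.
    """
    return len(answer.split()) < 8


def next_action(question: ScriptedQuestion, answer: str, followups_asked: int) -> str:
    """Decide whether to probe further or return to the base question flow."""
    if followups_asked < question.max_followups and needs_followup(answer):
        return "follow_up"
    return "next_question"


loose = ScriptedQuestion("How do you plan your week today?", max_followups=2)
tight = ScriptedQuestion("How do you plan your week today?", max_followups=0)

print(next_action(loose, "It was fine.", followups_asked=0))
print(next_action(tight, "It was fine.", followups_asked=0))
```

Capping follow-ups per question is one simple way to keep sessions comparable across participants while still leaving room for exploration; other designs could budget by time or by topic instead.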

- Automatically generates insights and makes every interview instantly queryable

Traditional research tools output raw transcripts and video files that require manual synthesis. Researchers spend hours tagging themes, extracting quotes, and compiling reports. That work doesn’t scale and is rarely reused. The winning AI-native user research platform would change that by structuring interviews as real-time data pipelines. The platform should:

- Auto-generate summaries, themes, and structured reports immediately after each session, reducing post-processing time to near zero.

- Expose interviews via a chat interface, allowing teams to extract quotes, synthesize sentiment, and follow up on specific topics without reading full transcripts.

- Index and link insights across sessions, enabling teams to search and cluster by topic, persona, or pattern—turning interviews into a queryable repository.

- Surface prior context on each participant, allowing teams to see historical interviews, past surveys, and product activity tied to that user. This enables richer follow-up and ensures that AI interviews build on prior knowledge, not start from scratch.

- Suggest which participants to schedule human follow-ups with, enabling researchers to spend their time more efficiently with the users who give more nuanced insights into pain points and other user experiences.

The result: interviews become structured, reusable inputs that support decision-making across product, design, marketing, and customer experience teams without requiring a dedicated researcher to interpret them.
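As a rough picture of what "index and link insights across sessions" could mean in practice, the sketch below stores tagged quotes in a small in-memory index keyed by theme. It is a toy under stated assumptions: the `InsightIndex` class, its method names, and the sample data are all hypothetical, and it assumes themes have already been extracted from transcripts by some upstream step.

```python
from collections import defaultdict


class InsightIndex:
    """Toy cross-session index: theme -> quotes tagged with that theme."""

    def __init__(self):
        self._by_theme = defaultdict(list)

    def add(self, session_id, participant, theme, quote):
        # Normalize the theme so "Pricing" and "pricing" land in one bucket.
        self._by_theme[theme.lower()].append(
            {"session": session_id, "participant": participant, "quote": quote}
        )

    def quotes_for(self, theme):
        """Return every quote tagged with the theme, across all sessions."""
        return list(self._by_theme.get(theme.lower(), []))

    def themes_by_frequency(self):
        """Rank themes by the number of distinct sessions that mention them."""
        counts = {
            theme: len({entry["session"] for entry in entries})
            for theme, entries in self._by_theme.items()
        }
        return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


index = InsightIndex()
index.add("s1", "p1", "Onboarding", "I could not find the import button.")
index.add("s2", "p2", "onboarding", "Setup took me most of an afternoon.")
index.add("s2", "p2", "Pricing", "I was not sure which plan I needed.")

print(index.themes_by_frequency())
print([q["quote"] for q in index.quotes_for("onboarding")])
```

A production system would more plausibly use embeddings or a search engine than exact theme labels, but the shape is similar: each interview adds rows to a shared store that any team can query later.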

- Meets enterprise standards for governance, security, and control

To be viable in large, regulated enterprises, AI-native research platforms must be built for governance. Security, control, and auditability aren’t features; they’re table stakes. From InfoSec reviews to privacy policies, the following capabilities are required:

- Privacy & Security: Proper handling of personally identifiable information, user consent for recordings, secure data storage, and safe integration with CRMs and internal tools.

- Bias & Hallucinations: Guardrails to prevent AI from going off-script, asking inappropriate questions, or injecting false conclusions into transcripts. The ability for a human to monitor multiple ongoing interviews and jump in when something goes wrong could also be compelling functionality.

- Customization: Controls for brand voice, tone, formatting, and compliance-specific language in how questions are asked and how summaries are written.

- Auditability: Full logs of who asked what, what was said, and how insights were generated to enable downstream review and traceability.

Enterprise buyers will not adopt AI-native research platforms unless they can trust the system to behave predictably, meet policy standards, and integrate cleanly into existing security architectures. The winners in this space will treat governance not as a feature, but as core infrastructure.

- Prices based on usage and value delivered to the customer

From a pricing standpoint, we’ve seen a few models emerge. Most platforms use flat software fees with tiered usage-based pricing, and apply overage charges once limits are exceeded. Usage is typically measured by:

- Number of sourced panel participants

- Number of interviews conducted

- Number of seats used to conduct interviews (typically with unlimited viewer seats to consume insights)

However, given that replacing user research headcount with AI user research agents is one emerging purchasing behavior, there may be an opportunity to rethink pricing around labor ROI, not just software usage.
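The flat-fee-plus-overage structure described above reduces to simple arithmetic, sketched here for the "interviews conducted" metric. The tier names, fees, allowances, and overage rates are invented for illustration and are not drawn from any vendor's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    flat_fee: float               # fixed monthly software fee
    included_interviews: int      # usage covered by the flat fee
    overage_per_interview: float  # charge for each interview past the limit


def monthly_cost(tier: Tier, interviews: int) -> float:
    """Flat fee plus overage for any interviews beyond the included allowance."""
    overage = max(0, interviews - tier.included_interviews)
    return tier.flat_fee + overage * tier.overage_per_interview


def cheapest_tier(tiers, interviews: int) -> Tier:
    """Pick the tier with the lowest total cost at a given usage level."""
    return min(tiers, key=lambda t: monthly_cost(t, interviews))


# Hypothetical tiers, for illustration only.
TIERS = [
    Tier("starter", flat_fee=500.0, included_interviews=25, overage_per_interview=30.0),
    Tier("growth", flat_fee=2000.0, included_interviews=150, overage_per_interview=20.0),
]

print(monthly_cost(TIERS[0], 40))      # 500 + 15 * 30 = 950.0
print(cheapest_tier(TIERS, 100).name)  # starter would cost 2750.0, so growth wins
```

Pricing around labor ROI would replace the per-interview meter with a measure of researcher time saved, which this sketch does not attempt to model.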

The shift from traditional to AI-native user research isn’t incremental – it’s a step-function change. This table summarizes what that transformation looks like across every stage of the process.

Furthermore, the platform should support a range of communication modalities between the human participant and the AI interviewer. At a minimum, this would include text-to-text, audio-to-text, and audio-to-audio exchanges. More advanced configurations may include video-to-text or video-to-audio, though we’ve found full video-to-video setups to be less critical in practice. This flexibility would ensure that the interview experience can be adapted to different user preferences, accessibility needs, and research goals—without compromising on insight quality.

An adjacent, emerging category is the use of synthetic user personas: AI agents that simulate user behavior, preferences, and decision-making at scale. Early-stage companies in the space demonstrate the power of using autonomous agents to model complex user interactions and generate synthetic feedback.

While promising, this approach is still early since most buyers today prioritize insights from real users. That said, synthetic personas may play a complementary role in prototyping, stress-testing ideas, or augmenting human feedback loops and research in the future.

Conclusion

User research is a broad and entrenched category that touches nearly every function in both B2B and B2C organizations. What was once owned by centralized research teams is now diffused across product, growth, design, customer experience, market insights, and consumer insights teams. That functional sprawl makes the buyer surface area for research tooling significantly wider—and the opportunity significantly larger.

The combination of voice and reasoning models is rewriting what’s possible—from how interviews are run to how insights are consumed. User research projects that used to take weeks now take days. And what used to require a dedicated researcher can now be done by a product manager, designer, or other non-researcher.

The market is massive. The incumbents have validated it. And the dominant AI user research platform hasn’t been crowned yet.

If you’re building something in this space—whether it’s infrastructure, end-user apps, or tooling that fits into the user research stack—we’d love to meet you. We are actively looking to meet with startups defining this new category.

Shoot a note to sophia@greylock.com. We’re excited to talk.

Thanks to Aatish Nayak, Bihan Jiang, Sara Xiang, Kevin Hou, Jerry Chen, Corinne Riley, and Christine Kim for their thought partnership.