Foundation models are at the top of mind for almost every company we speak with across industry and scale.

Whether companies are building new intelligent applications with large language models (LLMs) at their core, figuring out how to integrate these models into their existing product lines, or building internal apps to streamline operations, it is evident that LLM-based applications will become the dominant application paradigm over the next decade.

When speaking to companies beginning to develop LLM-based applications, several key gates emerged that these companies were working through:

- How do I get started?

- How do I tailor a LLM to my company’s needs?

- How do I improve performance, cost, and latency in production?

- How do I ensure data compliance?

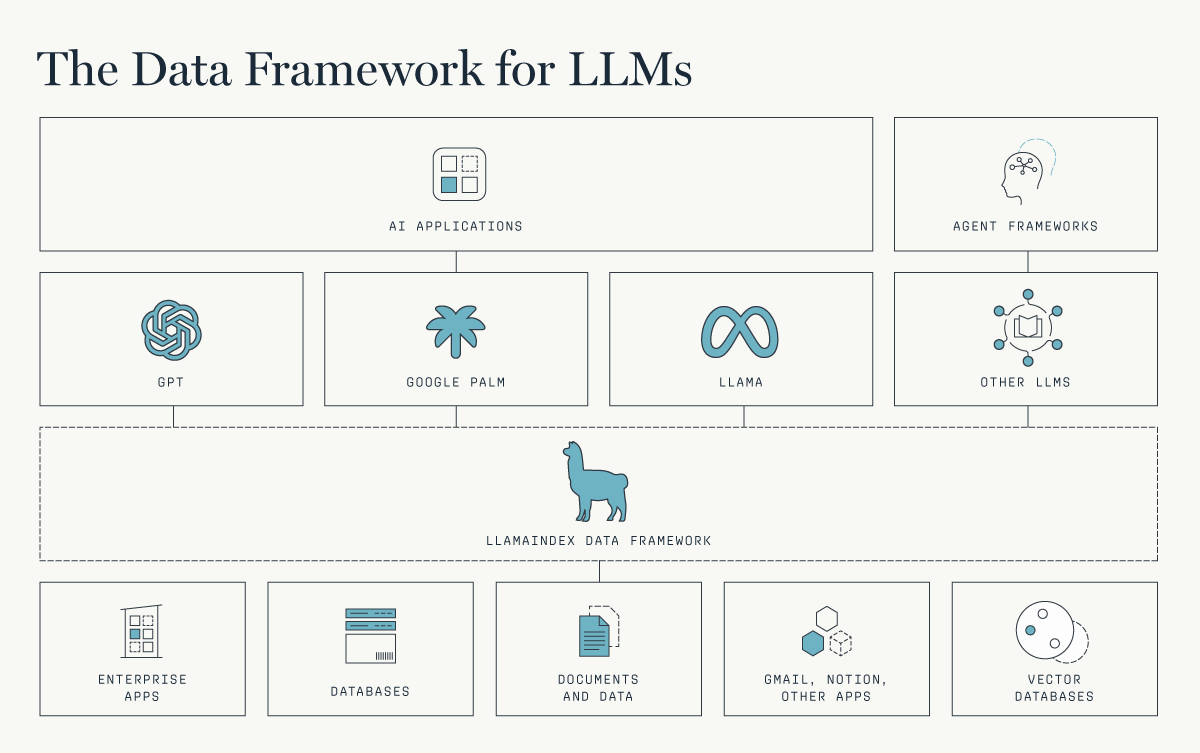

Answering these questions requires connecting an LLM to a company’s enterprise data – wherever it may reside. But bridging the two requires new application infrastructure, linking systems of intelligence to systems of record and other data sources. What is missing is a data framework that unleashes the power of LLMs on enterprise data.

This realization led us to meet Jerry Liu and Simon Suo, co-founders of LlamaIndex. Greylock is thrilled to back Jerry and Simon, and lead the seed round in LlamaIndex.

Jerry started LlamaIndex as a passion project while he experimented with GPT-3. Looking for ways to mitigate GPT-3’s limitations in working with personal data, he built LlamaIndex (fka GPT-Index) to bridge the data gap for personal projects. LlamaIndex is a data framework that provides data ingestion, data indexing, and a query engine for retrieval and synthesis of enterprise data for LLMs. All of these problems are solved by a performant and easy-to-use framework.

Once Jerry open-sourced LlamaIndex, he quickly realized that many others – ranging from hobbyists to engineers at large enterprises – shared the same challenge. He then teamed up with his former Uber colleague Simon, with whom he worked on ML research for the company, and the two decided to devote their full time to building the product and community.

With LlamaIndex, we believe the LLM application stack is beginning to emerge:

- Foundation models (OpenAI GPT, Google Palm, LLaMa, Inflection, Adept, Anthropic, and various open source models, etc.) provide the core reasoning capabilities.

- Data frameworks (LlamaIndex) for connecting LLMs to enterprise apps and data. Check out Llamahub for some quick integrations.

- Vector embeddings (vector DBs like Pinecone, Chroma, Weaviate, Zilliz, Qdrant or databases that support vector embeddings as well as search/analytics like Elastic, Postgres with PG Vector, Rockset, etc.)

- Agent frameworks (Langchain, HuggingFace Transformers Agent, AutoGPT, GPT Plugins, Fixie) for accelerating app development by working with agents, prompting, and chaining.

Not only do we believe the data component of the stack represents the best opportunity to build significant product depth and IP, it also corresponds to where significant value has accrued in other applications stacks. The promise of systems of intelligence is coming to fruition (with a few revisions – stay tuned!).

The AI community agrees with the promise. LlamaIndex currently has one of the fastest-growing communities in AI: 16k Github Stars, 20K Twitter followers, 200K Monthly downloads, and 6K Discord users.

If you’re interested in what the team is building, they are hiring!