In the new world order where cloud and LLMs intersect and GPUs are gold, where can startups build value?

Big cloud is finally being disrupted.

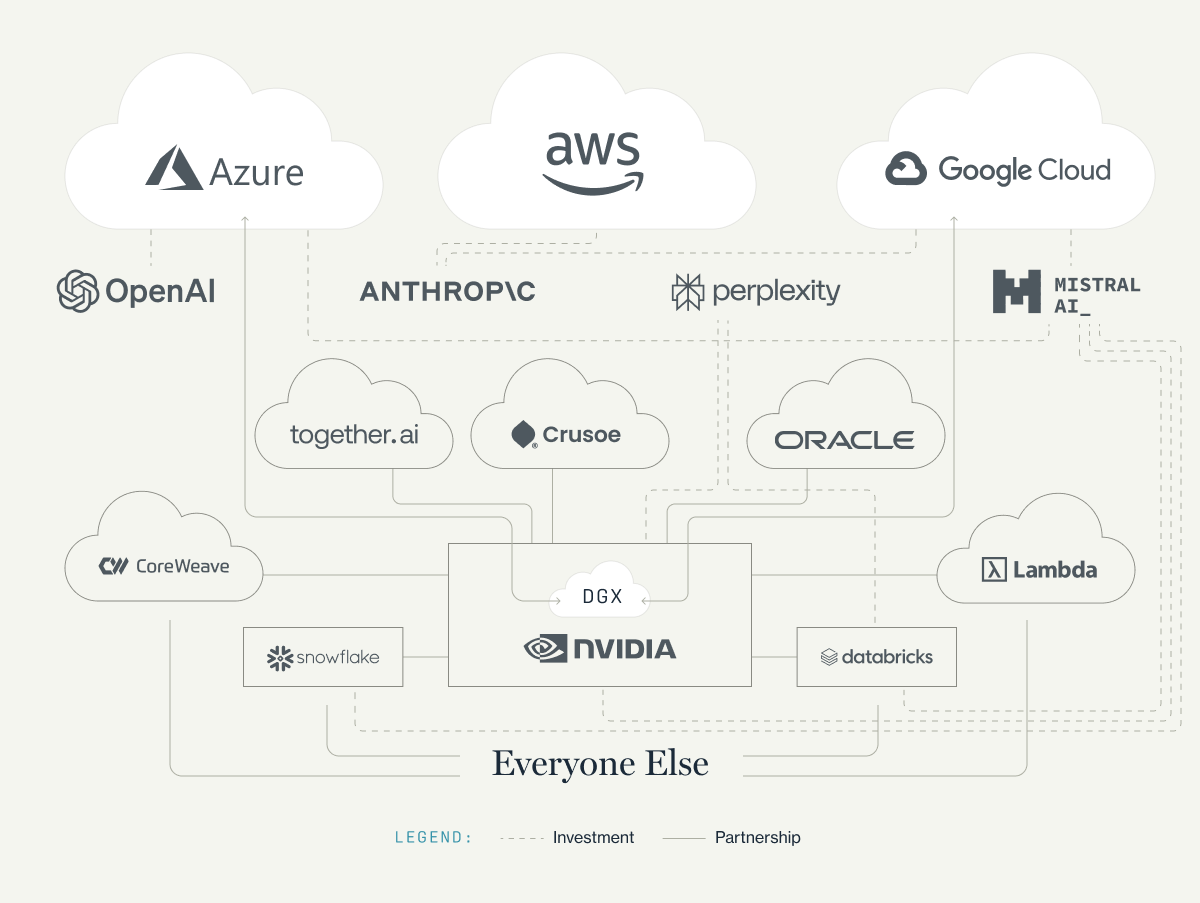

While the push for AI has made startups more reliant than ever on AWS, Microsoft Azure, and GCP, it has also increased the entire ecosystem’s reliance on NVIDIA.

Beyond its chokehold on GPUs (which may be temporary) and its numerous investments in and partnerships with AI companies of all sizes, NVIDIA has also entered head-to-head competition with the Big 3 through DGX Cloud, its AI-specific cloud service. Collectively, these moves have established NVIDIA as the central power broker in today’s AI revolution. This represents the first real shakeup among the cloud providers since they rose to dominance in the cloud platform shift.

Not only has NVIDIA been able to turn its advantages into critical partnerships with two of the three biggest cloud providers, it has also leveled the playing field for small and mid-sized computing companies. Thanks to partnerships with NVIDIA, these companies have been able to claim (or reclaim) sizable portions of the cloud computing market.

As we wrote last year, NVIDIA’s partnership with Oracle was instrumental in bringing the legacy company into the modern age. Databricks and Snowflake, already the poster children of the cloud transition era, dove headfirst into AI through collaborations with NVIDIA and became powerful players in the AI computing ecosystem. Mosaic has continued to scale since Databricks acquired it, establishing the data platform as a leader in training custom language models for customers and producing state-of-the-art models like DBRX. Meanwhile, the Snowflake team now provides a full-stack AI platform. These developments have enabled both Databricks and Snowflake to offer attractive distribution partnerships to AI startups as they look to AI to keep challenging the Big 3.

Additionally, NVIDIA’s partnerships and sizable investments have enabled a growing class of small, VC-backed, AI-specific cloud companies to thrive. Large financing rounds and/or GPU allocations from NVIDIA have enabled companies like CoreWeave, Together.ai, Crusoe, and Lambda Labs to win customers by offering more flexible compute options and better availability than the often-constrained Big 3 clouds.

All of this shows that we have entered the “Big 4” era of cloud. The Big 4 collectively power the entire ecosystem and often lead the largest financing rounds, each racing to establish crucial partnerships with startups to build up their AI edge.

The record-breaking rounds are heavily tilted towards LLM providers. Microsoft alone put $13B into OpenAI in 2023, AWS recently invested $2.7B in Anthropic, and Perplexity.ai’s rapid ascent to ever-higher valuations was achieved in part with backing from NVIDIA.

These new power dynamics, funding patterns, unique incumbent-challenger partnerships, and collective impact on potential exit strategies mean startups must approach competition in the AI era differently.

Today, there are areas where startups have the advantage, areas where more opportunity still exists, and areas where we believe incumbents are most likely to win. Many of our original guidelines for competing with the hyperscale clouds still hold true (e.g., avoiding direct competition with the incumbents, moving up the stack, establishing deep IP, and owning the developer community).

We’ve compiled a list of trends we’ve observed through our daily work as investors, our conversations with founders and enterprise executives, our research, and our ongoing data collection and analysis for Castles in the Cloud, Greylock’s interactive data project mapping funding and opportunities in the cloud ecosystem.

As outlined above, the majority of investor money is going into compute and foundation models, which means startups must look to other layers of the stack to build durable value. Below, we outline how startups are finding traction with tooling, infrastructure, and various approaches at the application layer, including vertical and horizontal apps, security, codegen, and robotics.

We’ll start from the bottom and work our way up the stack.

Foundation Models

The Intersection of the Cloud and LLMs

While the engine of AI is fueled and powered by the Big 4, it’s driven by startup-provided large language models. With massive funding rounds and often exclusive distribution deals, models from OpenAI, Anthropic, and Mistral have become deeply entrenched in the Big 4’s AI strategy. Partnerships like Microsoft’s with OpenAI and AWS’s with Anthropic highlight the increasing concentration of power between the Big 4 and the LLM providers.

Even Google, the outlier as the lone cloud provider developing its own foundation models in-house, has invested heavily in outside LLMs.

The necessary intersection of the Big 4 and LLMs makes for a crowded field. Although we tracked 10 new challengers in the LLM provider category in our Castles in the Cloud project, we don’t expect this field to grow much larger. We see some potential for domain-specific models to compete (as well as code-specific models that could serve as the basis for AI engineering co-pilots or agents), but these are resource-intensive undertakings with significant unknowns.

Similarly, compute is another capital-intensive undertaking for startups, and one that has received considerable investment from both the Big 4 and venture capital investors. As mentioned earlier, partnerships and investments from NVIDIA have allowed smaller AI-specific clouds to thrive, and there has been an overall funding boom in the sector. In our annual update to Castles in the Cloud, we tracked nearly $1.4 billion raised by computing startups in 2023, a sixfold increase from the $232M invested in 2022. The trend has continued well into 2024 with companies like Foundry, which just came out of stealth with a $350 million valuation to continue developing its public cloud purpose-built for ML.

For now, we encourage founders to approach opportunities higher up the stack.

DevTools & Infrastructure

At this point in the AI transition, we see tremendous opportunity for startups building infrastructure. However, those startups must carefully navigate a shifting landscape. As foundation models grow more capable and the cloud providers roll out additional services, the way applications prefer to consume infrastructure keeps changing. Infrastructure startups must therefore build with this quicksand in mind, staying agile and developer-focused so they don’t sink into it themselves. For example, investors and AI builders alike have debated the long-term durability of standalone vector stores. Standout companies like Pinecone and Weaviate raised a combined $150m last year and have attracted many customers, while other customers have preferred vector add-ons to existing databases, such as pgvector and MongoDB Atlas Vector Search.

As LLM-based applications are poised to become the dominant application paradigm over the next decade, we believe the data component of the stack represents the best opportunity to build significant product depth and IP. Developers need a way to connect to enterprise data and tailor an open-source model to their specific needs. That need has given rise to a class of startups like LlamaIndex, which provides a data framework for easily building custom retrieval-augmented generation (RAG) applications.
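To make the data layer concrete, here is a minimal sketch of the retrieval step at the heart of RAG. A query is embedded and compared against chunks of enterprise data, and the closest chunks are pasted into the prompt as context. The word-count `embed` function is a toy assumption standing in for a real embedding model. The brute-force ranking in `retrieve` is the job a vector store (standalone, or an add-on like pgvector) does at scale. None of these functions reflect LlamaIndex’s actual API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (an assumption): lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (what a vector store does at scale)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Augment the user's question with retrieved context before it is sent to a model."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

For example, `build_prompt("What is the refund policy?", docs, k=1)` selects only the chunk that mentions refunds. The model then answers from company data it was never trained on, which is why the data layer is where much of the product depth accrues.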

We also see significant demand for tools that make open-source models more effective, given the control, performance, and cost advantages open-source models offer. Companies like Predibase let developers fine-tune open-source models into smaller, task-specific models with better latency and performance. The company recently released LoRA Land, a collection of 25 fine-tuned Mistral-7B models reported to consistently outperform their base models.
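Fine-tuning of this kind is cheap largely because of low-rank adaptation (LoRA), the technique LoRA Land is named after. LoRA freezes a pretrained weight matrix W (d × k) and trains only two small matrices, B (d × r) and A (r × k). The layer then computes h = Wx + (α/r)·BAx. Below is a minimal, dependency-free sketch of that forward pass and the resulting parameter savings. The matrix sizes are illustrative assumptions and do not describe Predibase’s setup.

```python
def matvec(M: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """h = W x + (alpha / r) * B (A x).

    W (d x k) stays frozen; only A (r x k) and B (d x r) are trained.
    B is typically initialized to zeros, so training starts from the base model.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters: full fine-tuning of one d x k matrix vs. a rank-r adapter."""
    return d * k, r * (d + k)


full, lora = param_counts(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For a single 4096 × 4096 projection with rank 8, the adapter trains well under 1% of the parameters a full fine-tune would touch. That is what makes it practical to keep many task-specific adapters on top of one base model.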

Whether models are custom, fine-tuned, or base, serving them effectively at scale is one of the biggest blockers we see for growing startups and enterprises. Combining fast, performant inference with a delightful developer experience and workflow lets companies focus on getting their model and product into deployment. That’s why companies like Baseten have seen explosive growth recently.

There are also opportunities for startups to address one of the primary challenges of working with LLM applications. Because LLMs are non-deterministic, enterprises cannot reliably predict the quality of their responses, nor whether a small change in the prompt (for example, from injecting proprietary data) will change the output. Observability tools have become required products in the age of AI, since a startup’s IP moat is only as strong as its product’s performance. Recently, we invested in Braintrust, which offers developers a toolkit for instrumenting code and running evaluations, enabling teams to assess, log, refine, and enhance their AI-enabled products over time.
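Non-determinism is why evaluation tools score many samples rather than one. Here is a minimal sketch of an evaluation loop that runs each test case several times and reports a per-case and overall pass rate. `flaky_model` is a hypothetical stand-in for an LLM call, and `exact_match` is just one possible scorer. Nothing here reflects Braintrust’s actual API.

```python
import random
from statistics import mean
from typing import Callable

Model = Callable[[str, random.Random], str]


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 if the output matches the expected answer (case-insensitive), else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_eval(cases, model: Model, trials: int = 5, seed: int = 0, scorer=exact_match):
    """Run every (prompt, expected) case `trials` times; return per-case and overall pass rates."""
    rng = random.Random(seed)  # seeded so a given eval run is reproducible
    per_case = {}
    for prompt, expected in cases:
        scores = [scorer(model(prompt, rng), expected) for _ in range(trials)]
        per_case[prompt] = mean(scores)
    return per_case, mean(per_case.values())


ANSWERS = {"Capital of France?": "Paris", "2 + 2?": "4"}


def flaky_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical model: returns the right answer about 80% of the time."""
    return ANSWERS[prompt] if rng.random() < 0.8 else "not sure"


per_case, overall = run_eval(list(ANSWERS.items()), flaky_model, trials=10)
print(per_case, overall)
```

Re-running the same cases after a prompt or data change, and comparing the pass rates, is the basic loop that lets teams refine their AI products over time rather than guess.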

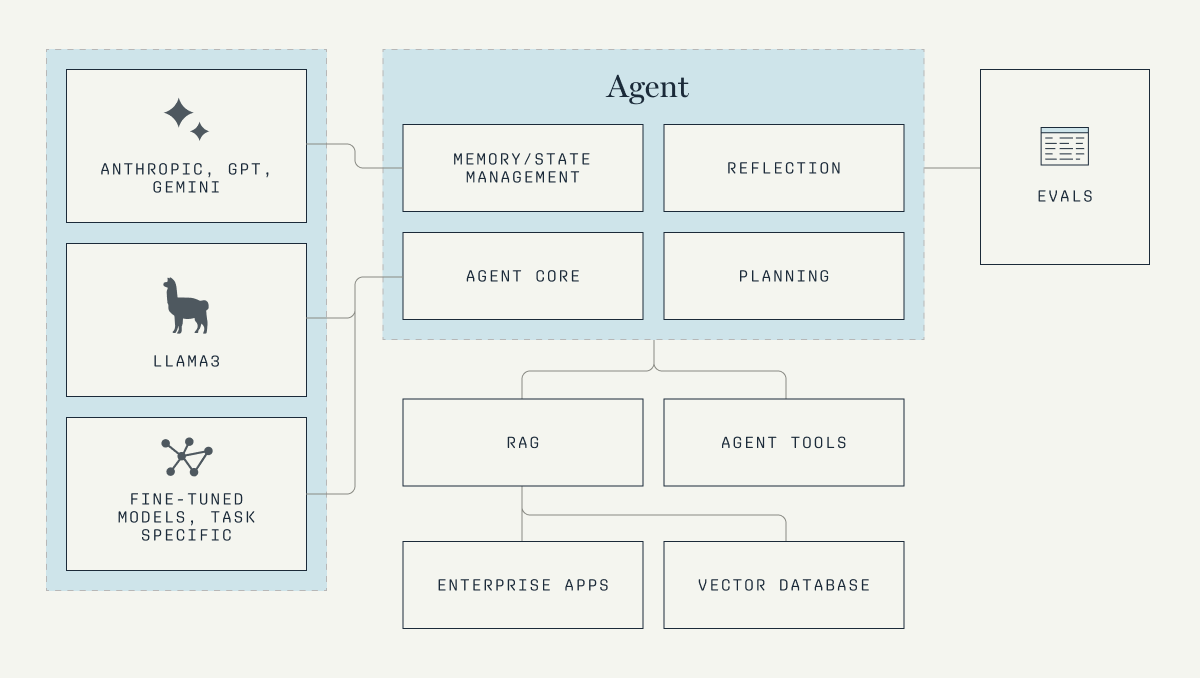

Agents

The emergence of agentic capabilities underlies many of the opportunities at the application layer. Many leading AI application companies are building their core IP at the agentic layer, orchestrating a number of third-party and fine-tuned models, as well as domain-specific tools, to reason through different tasks.

We’ve seen this paradigm begin to play out across the vertical and horizontal domains discussed below, as well as in security, root-cause analysis in observability, AI engineers like Devin, and more. This approach has produced some of the fastest-growing companies and provides a deeper moat than earlier thin wrappers on top of LLMs.

While we have seen a number of agent frameworks, most companies we’ve spoken to end up building their own orchestration systems. Adept, which is developing custom AI agents for the enterprise, has taken this approach. Over time, we believe orchestration can become more standardized while still reliably providing industry-specific utility. In the interim, there is opportunity in providing agent-specific tools.
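The in-house orchestration systems described above typically share a simple core loop. A planner picks the next tool, the tool runs, and its observation is fed back into the next decision, until the planner stops or a step budget runs out. The sketch below is a minimal version of that loop. The tools and the rule-based `plan_next_step` are hypothetical placeholders; in a real system the planner would be a model call that reasons over the task and the history so far.

```python
from typing import Callable, Optional

Tool = Callable[[str], str]

# Hypothetical domain-specific tools (e.g., for root-cause analysis).
TOOLS: dict[str, Tool] = {
    "query_logs": lambda q: f"3 errors matching '{q}' in the last hour",
    "search_docs": lambda q: f"runbook section 3 mentions '{q}'",
}


def plan_next_step(task: str, history: list[tuple[str, str]]) -> Optional[str]:
    """Hypothetical planner: a fixed rule standing in for an LLM that picks the next tool."""
    used = {name for name, _ in history}
    for name in ("query_logs", "search_docs"):
        if name not in used:
            return name
    return None  # nothing left to do


def run_agent(task: str, max_steps: int = 5) -> list[tuple[str, str]]:
    """Plan -> act -> observe loop with a hard step budget."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        tool_name = plan_next_step(task, history)
        if tool_name is None:
            break
        observation = TOOLS[tool_name](task)
        history.append((tool_name, observation))
    return history
```

The step budget and explicit history are deliberate. They keep a non-deterministic planner from looping indefinitely and leave a trace that observability tooling can inspect.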

Application Layer

Vertical & Horizontal Plays

Continuing last year’s trend of increasing vertical specialization, we’re seeing more startups carving out space for themselves with ML apps. We added 10 new entrants to the category in our Castles in the Cloud market map section, including a mix of vertical and horizontal apps.

As our colleague Christine Kim wrote, vertical-specific AI approaches are proving to be an attractive option for startups and don’t appear to be an area of focus for the Big 3. For example, legal-focused Harvey and EvenUp launched with funding in 2023, while companies already in our database, such as Adept and Tome, also raised new funding. We expect to see more horizontal apps for functions common to all enterprise organizations (such as sales and recruiting), as companies look to supplement or automate less strategic tasks in those domains. However, it’s unclear how much the truly core horizontal categories will be disrupted by AI startups. Unlike the incumbents of past generations that struggled to adapt to the cloud transition, today’s incumbents (so far) seem to be doing a far better job evolving with the latest platform shift.

Security

Security continues to be an all-important issue, and the new risks associated with AI have accelerated the pace of new company creation. In 2023, we added 13 new startups in the Castles in the Cloud database, and the sector racked up nearly $800 million from venture capital investors.

We spoke with dozens of CISOs and data teams leveraging GenAI in conjunction with multiple cloud data platforms. Again and again, they spoke of the need for a new approach to cloud data security to ensure that protected information, such as regulated data and core IP, is not ingested into AI models. We incubated and invested in Bedrock, which has developed a frictionless data-security-as-a-service platform tailored to the GenAI era, and we are actively looking for investments in other parts of the AI security stack.

Security startups are also using AI agent approaches to transform the SOC and identity and to improve remediation in apps and infrastructure. Kodem, which Greylock invested in last year, leverages deep runtime intelligence to understand true application risk. The company combines its runtime intelligence with LLM-based techniques to give users a complete picture of vulnerabilities in the applications actually in use and to predict potentially exploitable functions.

Codegen

One of the most exciting opportunities for startups today is in developing AI tools that can understand and “speak” code just like a human engineer. Our colleague Corinne Riley is heading up our work in this area and is focused on three primary approaches to this goal: AI co-pilots that enhance existing workflows; AI agents to replace engineers; and code-specific models trained on a mix of code and natural language. In 2023, we added the codegen category to the Castles in the Cloud database and tracked nine new startups. You can read more about Greylock’s thesis on this emerging sector in Corinne’s upcoming essay.

Robotics

Foundation models were also a boon to robotics funding in our data set last year, as investors recognized the promise of combining advances in robotics and hardware with foundation models.

We expect the trend to accelerate further this year. Figure recently raised $675m from OpenAI, NVIDIA, Microsoft, and others, and announced a partnership with OpenAI to develop robotics foundation models that allow its robots to process and reason about language. Physical Intelligence raised funding to bring general-purpose AI into the physical world, building on the founders’ research at Stanford.

If you are building at the intersection of AI and robotics, we’d love to chat. (jchen@greylock.com and jrisch@greylock.com)

Conclusion

The AI revolution has created a new world order. Startups navigating this increasingly competitive landscape will undoubtedly turn to the Big 4 for resources and partnerships, but can carve out space for themselves at higher layers of the evolving AI stack. From apps to agents, security to codegen and robotics, we are eager to work with founders building where the incumbents prefer to outsource innovation to the challengers.